2014_Ch.17_Notes

8/17/2019 2014_Ch.17_Notes

QMDS 202 Data Analysis and Modeling

Chapter 17 Multiple Regression

Model and Required Conditions

For k independent variables (predicting variables) x1, x2, …, xk, the multiple linear regression model is represented by the following equation:

$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \cdots + \beta_k x_k + \varepsilon$$

where β1, β2, …, βk are the population regression coefficients of x1, x2, …, xk respectively, β0 is the constant term, and ε (the Greek letter epsilon) represents the random term (also called the error variable), that is, the difference between the actual value of Y and the estimated value of Y based on the values of the independent variables. The random term thus accounts for all other independent variables that are not included in the model.

Required Conditions for the Error Variable:

1. The probability distribution of the error variable ε is normal.

2. The mean of the error variable is 0.

3. The standard deviation of ε is σε, which is constant for each value of x.

4. The errors are independent.

The general form of the sample regression equation is expressed as follows:

$$\hat{y} = b_0 + b_1 x_1 + b_2 x_2 + \cdots + b_k x_k$$

where b1, b2, …, bk are the sample linear regression coefficients of x1, x2, …, xk respectively and b0 is the constant of the equation.

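For k = 2, the sample regression equation is ŷ = b0 + b1x1 + b2x2, where b0, b1, and b2 can be found by solving a system of three normal equations:

$$\begin{aligned}
\Sigma y &= n b_0 + b_1 \Sigma x_1 + b_2 \Sigma x_2 \\
\Sigma x_1 y &= b_0 \Sigma x_1 + b_1 \Sigma x_1^2 + b_2 \Sigma x_1 x_2 \\
\Sigma x_2 y &= b_0 \Sigma x_2 + b_1 \Sigma x_1 x_2 + b_2 \Sigma x_2^2
\end{aligned}$$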

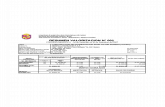

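Example 1

| x1 | x2 | y | x1y | x2y | x1x2 | x1² | x2² | ŷ |
|---|---|---|---|---|---|---|---|---|
| 1 | 200 | 100 | 100 | 20000 | 200 | 1 | 40000 | 82.99 |
| 5 | 700 | 300 | 1500 | 210000 | 3500 | 25 | 490000 | 305.20 |
| 8 | 800 | 400 | 3200 | 320000 | 6400 | 64 | 640000 | 394.73 |
| 6 | 400 | 200 | 1200 | 80000 | 2400 | 36 | 160000 | 241.55 |
| 3 | 100 | 100 | 300 | 10000 | 300 | 9 | 10000 | 95.92 |
| 10 | 600 | 400 | 4000 | 240000 | 6000 | 100 | 360000 | 379.61 |
| 33 | 2800 | 1500 | 10300 | 880000 | 18800 | 235 | 1700000 | 1500 |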

n = 6

$$\begin{aligned}
1500 &= 6 b_0 + 33 b_1 + 2800 b_2 \\
10300 &= 33 b_0 + 235 b_1 + 18800 b_2 \\
880000 &= 2800 b_0 + 18800 b_1 + 1700000 b_2
\end{aligned}$$

By solving the above system of normal equations, we should find the following:

b0 = 6.397   b1 = 20.492   b2 = 0.2805

∴ The sample multiple linear regression equation is:

$$\hat{y} = 6.397 + 20.492 x_1 + 0.2805 x_2$$
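As a check on the arithmetic, the normal equations can be built and solved numerically. The sketch below is only an illustration: the six (x1, x2, y) triples are our reading of the Example 1 table, and the helper names `normal_equations` and `solve` are made up for this note rather than taken from any package.

```python
# Six observations as read from the Example 1 table: (x1, x2, y).
data = [(1, 200, 100), (5, 700, 300), (8, 800, 400),
        (6, 400, 200), (3, 100, 100), (10, 600, 400)]


def normal_equations(rows):
    """Build the 3x3 coefficient matrix and right-hand side for k = 2."""
    n = len(rows)
    s1 = sum(x1 for x1, _, _ in rows)
    s2 = sum(x2 for _, x2, _ in rows)
    sy = sum(y for _, _, y in rows)
    s11 = sum(x1 * x1 for x1, _, _ in rows)
    s22 = sum(x2 * x2 for _, x2, _ in rows)
    s12 = sum(x1 * x2 for x1, x2, _ in rows)
    s1y = sum(x1 * y for x1, _, y in rows)
    s2y = sum(x2 * y for _, x2, y in rows)
    matrix = [[n, s1, s2], [s1, s11, s12], [s2, s12, s22]]
    return matrix, [sy, s1y, s2y]


def solve(matrix, rhs):
    """Gauss-Jordan elimination with partial pivoting."""
    m = [row[:] + [r] for row, r in zip(matrix, rhs)]
    size = len(m)
    for col in range(size):
        pivot = max(range(col, size), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(size):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [a - factor * b for a, b in zip(m[r], m[col])]
    return [m[i][size] / m[i][i] for i in range(size)]


matrix, rhs = normal_equations(data)
b0, b1, b2 = solve(matrix, rhs)
print(round(b0, 3), round(b1, 3), round(b2, 4))
```

In practice a spreadsheet or statistics package does this step; the hand-rolled solver is here only to keep the example free of dependencies.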

Interpretation of the Regression Coefficients

b1: the approximate change in y if x1 is increased by 1 unit and x2 is held constant.

b2: the approximate change in y if x2 is increased by 1 unit and x1 is held constant.

In Example 1, if x1 is increased by 1 unit and x2 is held constant, then the approximate change in y will therefore be 20.492 units.

Point Estimate

In Example 1, suppose x1 = 4 and x2 = 500, then the point estimate of y equals:

$$\hat{y} = 6.397 + 20.492(4) + 0.2805(500) = 228.61$$
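A point estimate is just the sample regression equation evaluated at chosen x values. The short sketch below assumes the rounded Example 1 coefficients (6.397, 20.492, 0.2805) and the inputs x1 = 4, x2 = 500; the function name `point_estimate` is hypothetical.

```python
def point_estimate(coefficients, x_values):
    """coefficients = (b0, b1, ..., bk); x_values = (x1, ..., xk)."""
    b0, slopes = coefficients[0], coefficients[1:]
    return b0 + sum(b * x for b, x in zip(slopes, x_values))


# Rounded coefficients from Example 1 (assumed inputs for this sketch).
y_hat = point_estimate((6.397, 20.492, 0.2805), (4, 500))
print(y_hat)
```

Because the coefficients are rounded, the last decimal place of the result can differ slightly from a figure computed with full precision.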

The Standard Error of Estimate in Multiple Regression Model

$$s_\varepsilon = \sqrt{\frac{\Sigma (y_i - \hat{y}_i)^2}{n - k - 1}}$$

where yi = the observed y value in the sample

ŷi = the estimated y value calculated from the multiple regression equation

In Example 1, the squared residuals (yi − ŷi)² are (17.01)², (−5.20)², (5.27)², (−41.55)², (4.08)², and (20.39)², which total 2502.954.

$$s_\varepsilon = \sqrt{\frac{2502.954}{6 - 2 - 1}} = 28.88$$

Note: sε is the point estimate of σε (the standard deviation of the error variable ε).
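The same calculation can be scripted. This is a minimal sketch, assuming the six Example 1 observations and the rounded coefficients b0 = 6.397, b1 = 20.492, b2 = 0.2805; the variable names are ours.

```python
import math

# (x1, x2, y) triples as read from the Example 1 table.
data = [(1, 200, 100), (5, 700, 300), (8, 800, 400),
        (6, 400, 200), (3, 100, 100), (10, 600, 400)]
b0, b1, b2 = 6.397, 20.492, 0.2805   # rounded sample coefficients
k = 2                                # number of independent variables

# Residual e_i = y_i - y_hat_i for each observation.
residuals = [y - (b0 + b1 * x1 + b2 * x2) for x1, x2, y in data]

# Sum of squared residuals, then divide by n - k - 1 degrees of freedom.
sse = sum(e * e for e in residuals)
s_eps = math.sqrt(sse / (len(data) - k - 1))
print(round(sse, 2), round(s_eps, 2))
```

The sum of squares obtained this way differs in the first decimal place from the hand total above, because the hand total squares residuals that were already rounded to two decimals.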

Testing the Validity of the Model: The Analysis of Variance (ANOVA)

Let's consider a simple linear regression model:

[Figure: plot of y against x; ȳ = Σy / n = the mean of y]

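$$(y_i - \bar{y}) = (\hat{y}_i - \bar{y}) + (y_i - \hat{y}_i)$$

$$\Rightarrow \Sigma (y_i - \bar{y}) = \Sigma (\hat{y}_i - \bar{y}) + \Sigma (y_i - \hat{y}_i)$$

Σ(yi − ȳ) = total deviations

Σ(ŷi − ȳ) = total deviations of estimated values from the mean

Σ(yi − ŷi) = error deviations = Σei

ei = yi − ŷi = the residual of the ith data point

For a least-squares fit that includes a constant term, the cross-product term vanishes when the deviations are squared and summed, so:

$$\Sigma (y_i - \bar{y})^2 = \Sigma (\hat{y}_i - \bar{y})^2 + \Sigma (y_i - \hat{y}_i)^2$$

⇒ SST = SSR + SSE

SST = total sum of squared deviations = total variation

SSR = sum of squares resulting from regression = explained variation

SSE = sum of squares resulting from sampling error = unexplained variation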
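The decomposition can be checked numerically on Example 1. The sketch below assumes the six observations from the example and fits the two-variable model with the centred-sums form of the normal equations (a standard rearrangement for k = 2); all names are illustrative.

```python
# (x1, x2, y) triples as read from the Example 1 table.
data = [(1, 200, 100), (5, 700, 300), (8, 800, 400),
        (6, 400, 200), (3, 100, 100), (10, 600, 400)]
n = len(data)
m1 = sum(x1 for x1, _, _ in data) / n
m2 = sum(x2 for _, x2, _ in data) / n
my = sum(y for _, _, y in data) / n

# Centred sums of squares and cross-products.
s11 = sum((x1 - m1) ** 2 for x1, _, _ in data)
s22 = sum((x2 - m2) ** 2 for _, x2, _ in data)
s12 = sum((x1 - m1) * (x2 - m2) for x1, x2, _ in data)
s1y = sum((x1 - m1) * (y - my) for x1, _, y in data)
s2y = sum((x2 - m2) * (y - my) for _, x2, y in data)

# Least-squares coefficients for two independent variables.
det = s11 * s22 - s12 ** 2
b1 = (s1y * s22 - s12 * s2y) / det
b2 = (s2y * s11 - s12 * s1y) / det
b0 = my - b1 * m1 - b2 * m2

fitted = [b0 + b1 * x1 + b2 * x2 for x1, x2, _ in data]
sst = sum((y - my) ** 2 for _, _, y in data)                    # total variation
ssr = sum((f - my) ** 2 for f in fitted)                        # explained
sse = sum((y - f) ** 2 for (_, _, y), f in zip(data, fitted))   # unexplained
print(round(sst, 3), round(ssr, 3), round(sse, 3))
```

With unrounded coefficients, SSR + SSE reproduces SST up to floating-point error.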

The ANOVA

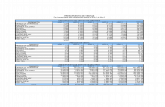

(Refer to the associated computer output of this example)

H0: The regression model is not significant (β1 = β2 = … = βk = 0)

H1: The regression model is significant (At least one βi ≠ 0)

α = 0.05   df1 = k = 2   df2 = n − k − 1 = 6 − 2 − 1 = 3

Critical value = 9.55

Test statistic = 55.44 > 9.55 ⇒ Reject H0

We can also use the p-value provided by the output to arrive at the conclusion:

p-value = 0.0043 < α = 0.05 ⇒ Reject H0

∴ The regression model is significant. (There is at least one independent variable that can explain Y.)
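The F statistic is the ratio of the mean square for regression to the mean square for error. The sketch below assumes SST = 95000 and SSE = 2502.636 for Example 1 and takes the critical value 9.55 from an F table. The p-value line uses a closed form that holds only when the numerator degrees of freedom equal 2, as they do here; for other cases a statistics package is needed.

```python
sst = 95000.0        # total variation for Example 1 (assumed input)
sse = 2502.636       # unexplained variation (assumed input)
n, k = 6, 2

df1 = k
df2 = n - k - 1
msr = (sst - sse) / df1          # mean square for regression
mse = sse / df2                  # mean square for error
f_stat = msr / mse

critical_value = 9.55            # F(0.05; 2, 3), read from a table
reject_h0 = f_stat > critical_value

# Upper-tail probability of F(2, df2); valid only because df1 == 2.
p_value = (1 + 2 * f_stat / df2) ** (-df2 / 2)
print(round(f_stat, 2), reject_h0, round(p_value, 4))
```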

II The t-Tests for Regression Coefficients (Slopes)

A t-test is used to determine if there is a meaningful relationship between the dependent variable and one of the independent variables.

In Example 1, the t-test for X1 (again refer to the computer output of this example):

H0: X1 is not a significant independent variable (β1 = 0)

H1: X1 is a significant independent variable (β1 ≠ 0)

α = 0.05   α/2 = 0.025   df = n − k − 1 = 6 − 2 − 1 = 3

Critical values = ± 3.182

Reject H0 if TS < −3.182 or TS > 3.182

$$TS = \frac{b_1 - (\beta_1)_0}{S_{b_1}}$$

where S_b1 = estimated standard deviation of b1

$$TS = \frac{20.492 - 0}{5.882} = 3.484 > 3.182 \Rightarrow \text{Reject } H_0$$

p-value approach:

p-value = 0.04 < α = 0.05 ⇒ Reject H0

∴ The slope β1 is significant, that is, there is a meaningful relationship between X1 and Y.

The t-test for X2:

H0: X2 is not a significant independent variable (β2 = 0)

H1: X2 is a significant independent variable (β2 ≠ 0)

α = 0.05   α/2 = 0.025   df = n − k − 1 = 6 − 2 − 1 = 3

Critical values = ± 3.182

Reject H0 if TS < −3.182 or TS > 3.182

$$TS = \frac{b_2 - (\beta_2)_0}{S_{b_2}}$$

where S_b2 = estimated standard deviation of b2

$$TS = \frac{0.2805 - 0}{0.0686} = 4.089 > 3.182 \Rightarrow \text{Reject } H_0$$

p-value approach:

p-value = 0.0264 < α = 0.05 ⇒ Reject H0

∴ X2 is also a significant independent variable.
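The standard deviations of the coefficients normally come from computer output, but for k = 2 they can be reproduced by hand. The sketch below assumes the six Example 1 observations and uses the two-variable identities S_b1 = sε·√(S22/D) and S_b2 = sε·√(S11/D), where S11, S22, S12 are centred sums and D = S11·S22 − S12². These symbols and variable names are ours, not the notes'.

```python
import math

# (x1, x2, y) triples as read from the Example 1 table.
data = [(1, 200, 100), (5, 700, 300), (8, 800, 400),
        (6, 400, 200), (3, 100, 100), (10, 600, 400)]
n, k = len(data), 2
m1 = sum(r[0] for r in data) / n
m2 = sum(r[1] for r in data) / n
my = sum(r[2] for r in data) / n

s11 = sum((x1 - m1) ** 2 for x1, _, _ in data)
s22 = sum((x2 - m2) ** 2 for _, x2, _ in data)
s12 = sum((x1 - m1) * (x2 - m2) for x1, x2, _ in data)
s1y = sum((x1 - m1) * (y - my) for x1, _, y in data)
s2y = sum((x2 - m2) * (y - my) for _, x2, y in data)
det = s11 * s22 - s12 ** 2

# Least-squares slopes and intercept.
b1 = (s1y * s22 - s12 * s2y) / det
b2 = (s2y * s11 - s12 * s1y) / det
b0 = my - b1 * m1 - b2 * m2

# Standard error of estimate.
sse = sum((y - (b0 + b1 * x1 + b2 * x2)) ** 2 for x1, x2, y in data)
s_eps = math.sqrt(sse / (n - k - 1))

# Estimated standard deviations of the slopes and their t statistics.
sb1 = s_eps * math.sqrt(s22 / det)
sb2 = s_eps * math.sqrt(s11 / det)
t1 = (b1 - 0) / sb1
t2 = (b2 - 0) / sb2

critical = 3.182   # t(0.025; 3), read from a table
print(round(t1, 3), abs(t1) > critical, round(t2, 3), abs(t2) > critical)
```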

In case there are some insignificant independent variables in the model (the p-values of some regression coefficients are bigger than α), we should take out the most insignificant variable from the model (the one with the highest p-value) and run the regression function once again by using only the remaining variables. Then we observe the p-values of the coefficients in this new model and repeat the same procedure (if necessary) until all the p-values are less than α.
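The removal procedure is a simple loop. The sketch below is only an outline of that loop: `fit_p_values` is a hypothetical callable standing in for "run the regression and read off the p-values", and the p-values in the stub are made up purely to exercise the code.

```python
def backward_eliminate(variables, fit_p_values, alpha=0.05):
    """Drop the variable with the highest p-value until all are below alpha.

    fit_p_values(names) is assumed to refit the model with the given
    variables and return a dict mapping each name to its p-value.
    """
    remaining = list(variables)
    while remaining:
        p_values = fit_p_values(remaining)
        worst = max(remaining, key=lambda name: p_values[name])
        if p_values[worst] < alpha:
            break                     # every remaining variable is significant
        remaining.remove(worst)       # take out the most insignificant variable
    return remaining


def stub_fit(names):
    """Made-up p-values for illustration; a real fit would recompute them."""
    table = {"x1": 0.01, "x2": 0.03, "x3": 0.40}
    return {name: table[name] for name in names}


kept = backward_eliminate(["x1", "x2", "x3"], stub_fit)
print(kept)
```

In a real analysis the p-values change each time a variable is removed, which is why the model has to be refitted inside the loop rather than once at the start.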

The Coefficient of Multiple Determination (R²)

$$R^2 = \frac{SSR}{SST} = \frac{\text{explained variation}}{\text{total variation}}$$

In Example 1,

$$R^2 = \frac{92497}{92497 + 2503} = 0.974$$

We can conclude that 97.4% of the variation in Y is explained by using X1 and X2 as independent variables.

The Adjusted R²

The adjusted R² has been adjusted to take into account the sample size and the number of independent variables. The rationale for this statistic is that, if the number of independent variables k is large relative to the sample size n, the unadjusted R² value may be unrealistically high.

$$R^2_{adj} = 1 - \frac{SSE/(n - k - 1)}{SST/(n - 1)} = 1 - (1 - R^2)\left(\frac{n - 1}{n - k - 1}\right)$$

If n is considerably larger than k, the actual and adjusted R² values will be similar. But if SSE is quite different from 0 and k is large compared to n, the actual and adjusted values of R² will differ substantially.

In Example 1,

$$R^2_{adj} = 1 - \frac{2502.636/3}{95000/5} = 1 - \frac{834.212}{19000} = 0.956$$
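Both forms of the adjusted R² formula give the same number, which is easy to confirm. The sketch below assumes SST = 95000 and SSE = 2502.636 with n = 6 and k = 2 for Example 1; the function names are illustrative.

```python
def r_squared(sse, sst):
    """R^2 = SSR / SST, with SSR = SST - SSE."""
    return (sst - sse) / sst


def adjusted_r_squared(sse, sst, n, k):
    """First form: 1 - [SSE / (n - k - 1)] / [SST / (n - 1)]."""
    return 1 - (sse / (n - k - 1)) / (sst / (n - 1))


def adjusted_from_r2(r2, n, k):
    """Second form: 1 - (1 - R^2) * (n - 1) / (n - k - 1)."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


sst, sse, n, k = 95000.0, 2502.636, 6, 2   # assumed Example 1 inputs
r2 = r_squared(sse, sst)
adj_a = adjusted_r_squared(sse, sst, n, k)
adj_b = adjusted_from_r2(r2, n, k)
print(round(r2, 3), round(adj_a, 3), round(adj_b, 3))
```

With only six observations and two independent variables, the adjustment lowers R² by roughly 0.02, which illustrates the point about k being large relative to n.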

The Multicollinearity Problem in Multiple Regression Model

Multicollinearity is the name given to the situation in which two independent variables (e.g. Xi and Xj) are closely correlated. If this is the case, the values of the two regression coefficients (bi and bj) tend to be unreliable and an estimate made with an equation that uses these values also tends to be unreliable. This is because, if Xi and Xj are closely correlated, values in Xj don't necessarily remain constant while Xi changes. If two independent variables are closely correlated, that is, if their correlation coefficient (r) is close to ±1, a simple solution to the multicollinearity problem is to use just one of them in a multiple regression model.

As a rule of thumb, if the absolute value of r of Xi and Xj is bigger than or equal to 0.8, then we should drop one of them from the regression model.

In Example 1, r of X1 and X2 = 0.741 is not bigger than 0.8

⇒ X1 and X2 can be used together in the model.
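The rule of thumb only needs the ordinary (Pearson) correlation coefficient between the two independent variables. A minimal sketch, assuming the x1 and x2 columns of Example 1 and the 0.8 cut-off stated above; the function name `pearson_r` is ours:

```python
import math


def pearson_r(xs, ys):
    """Sample correlation coefficient between two equal-length lists."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


# x1 and x2 columns as read from the Example 1 table.
x1 = [1, 5, 8, 6, 3, 10]
x2 = [200, 700, 800, 400, 100, 600]

r = pearson_r(x1, x2)
drop_one = abs(r) >= 0.8      # rule-of-thumb threshold from the notes
print(round(r, 3), drop_one)
```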

Interval Estimates for Population Regression Coefficients

The confidence interval of βi is found by:

$$b_i \pm t_{\alpha/2} S_{b_i}$$

df = n − k − 1

In Example 1, the 95% confidence interval of β1 is:

20.492 ± 3.182 × 5.882

= (1.77 to 39.21)
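The interval is the point estimate plus or minus the margin of error. The sketch below assumes the Example 1 figures b1 = 20.492 and S_b1 = 5.882, with the t value 3.182 read from a table for α/2 = 0.025 and df = 3; the function name is illustrative.

```python
def confidence_interval(b, t_critical, s_b):
    """Return (lower, upper) limits of b +/- t * S_b."""
    margin = t_critical * s_b
    return b - margin, b + margin


# Assumed inputs: rounded Example 1 values and a table lookup for t.
lower, upper = confidence_interval(20.492, 3.182, 5.882)
print(lower, upper)
```

Because the inputs are rounded, the limits can differ in the last decimal place from an interval computed with full-precision output. The interval does not contain 0, which agrees with the t-test conclusion that β1 is significant.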
